Hi!

Braindump is our attempt to imagine what game creation could be like in the brave new world of LLMs and generative AI. We want to give you an entire AI game studio, complete with coders, artists, and so on, to help you create your dream game.

With Braindump, you build top-down/2.5D games or interactive worlds by simply typing prompts. For example, typing “Create a Starfighter that can shoot lasers and drop BB-8 bombs” will generate 3D models, game data, and scripts that make your prompt come to life. You can then instantly play your game, and even invite a friend to play with you.

As we’ve been working on Braindump for a while now, we figured it’s time for an update: to share some of what we’ve learned and to ask for feedback. You can also sign up for the alpha here if you’d like to try the product yourself and give us direct feedback. Everyone is more than welcome to join our Discord and chat with us directly, and there are more videos on our TikTok account.

Anyway, let’s jump into it!

From First Experiments to Today

Braindump started around six months ago with some humble prototypes, using SVGs to represent game units.

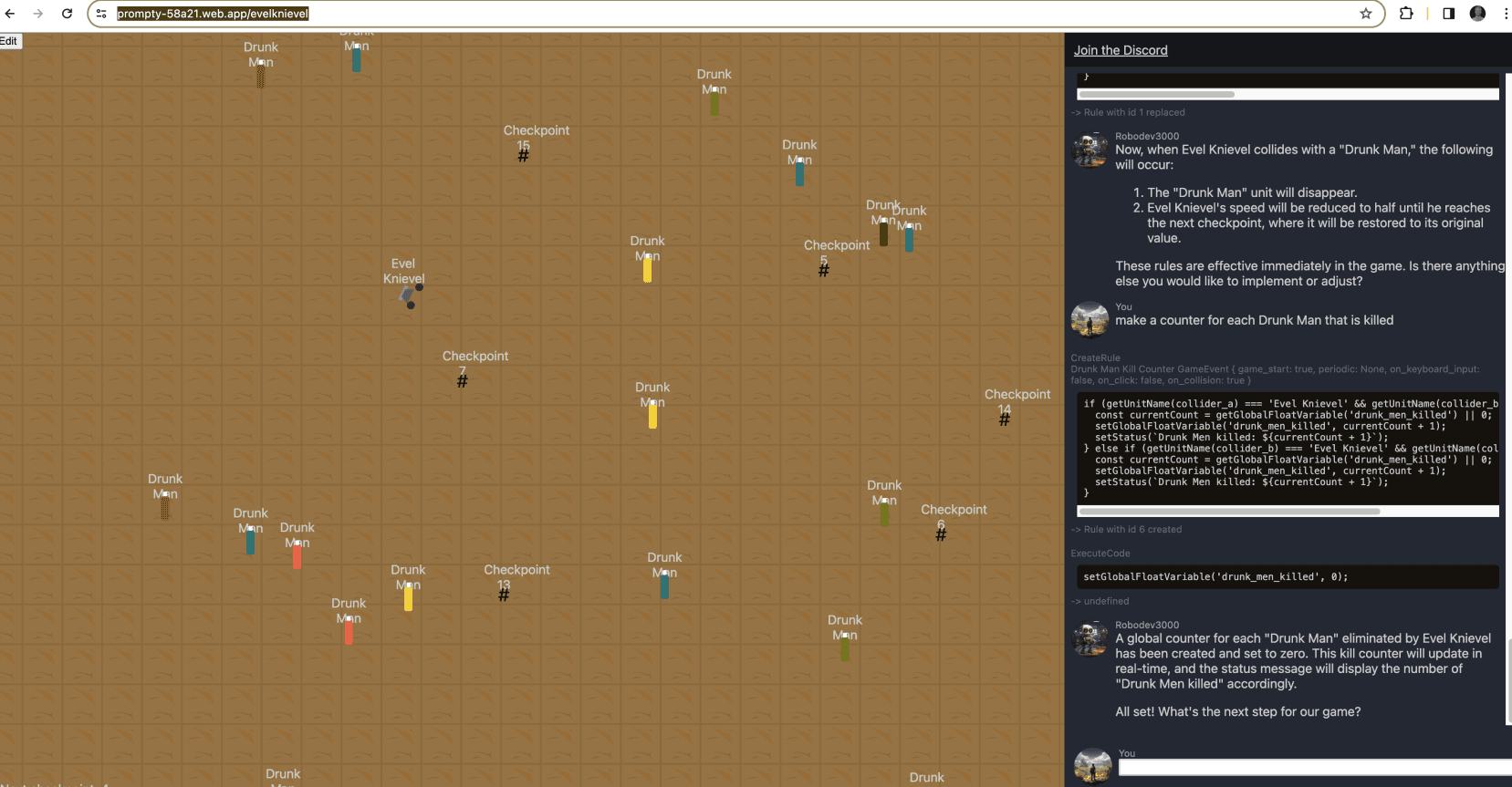

Since then, we’ve added 3D model generation, multiplayer, and we’ve reworked the UX countless times. Most of all, we’ve iterated a lot on how the prompting works. Here’s what it looks like as of now:

There are currently a few core operations in Braindump:

You can define units, abilities, and attributes, such as “an Orc with 50 HP that can place a magic wand.”

You can populate the game map with objects, for example, “place twenty Orcs in a circle.”

You can create game rules and logic, e.g., “When Orcs reach 0 health, create a ghost Orc in their place.”

You can create new 3D models, e.g., “I want my Orc to be pink and have fluffy ears.” We’re using Meshy to generate the 3D models.

All of this is accessible through a single, unified natural-language prompting interface. Prompts can be started from anywhere and can include all kinds of context, including positions, objects, and more. Your prompt is translated into code by an LLM, and the runtime executes that code to update the game state. Building and playing are online, multiplayer, and instant. Jump in, send a link to a friend, and have fun building together!

Challenge 1: Designing UX for Prompting

There are two big problems with using LLMs to help you build things: 1) how do you get the LLM to consistently do what you want and 2) what is the best UX for interacting with the LLMs? Let’s talk about the latter problem first.

We experimented with many UX paradigms before settling on our current iteration. One of the first ones we tried was “generate a game from a description,” but it quickly broke down. Imagine that you’ve hired a game studio to build a game for you, but you only get one chance at perfectly specifying your game to them. There are zero feedback rounds; they will build their best interpretation of what you’ve specified, which is unlikely to be what you imagined. LLMs have the same problem.

In fact, a lot of problems with LLMs are strikingly familiar if you just replace the word “LLM” with “developer” or “game studio.” In many cases, we’ve found that the reason the LLM can’t do what we want is simply that we haven’t given it the right information or context for the task.

This also goes for users. Working with an LLM is a bit like being a boss; you need to clearly articulate what you want, as it can’t read your mind. The difference from a human employee, though, is that the LLM will carry on regardless of the input and dream up whatever response it can so that it can output something. We’re looking into how we can get the LLM to ask for clarification, but it’s a balancing act as you do want it to guess a lot of the time. If I type “Create an Orc,” then I don’t want to be asked 20 follow-up questions to exactly specify the nature of the Orc; I just want it to take a guess. Figuring out exactly when a user expects it to ask for clarification and when not is one of our bigger challenges.

After experimenting with taking a whole description and generating a game from it, we switched to a more iterative approach. By building up the game over many prompts, you have a chance to go into more detail or iterate on things. For example, I may ask it to “Create an Orc,” and once it’s done that, I may start adding details to that Orc: “Make it afraid of ducks” or “Give it sunglasses.”

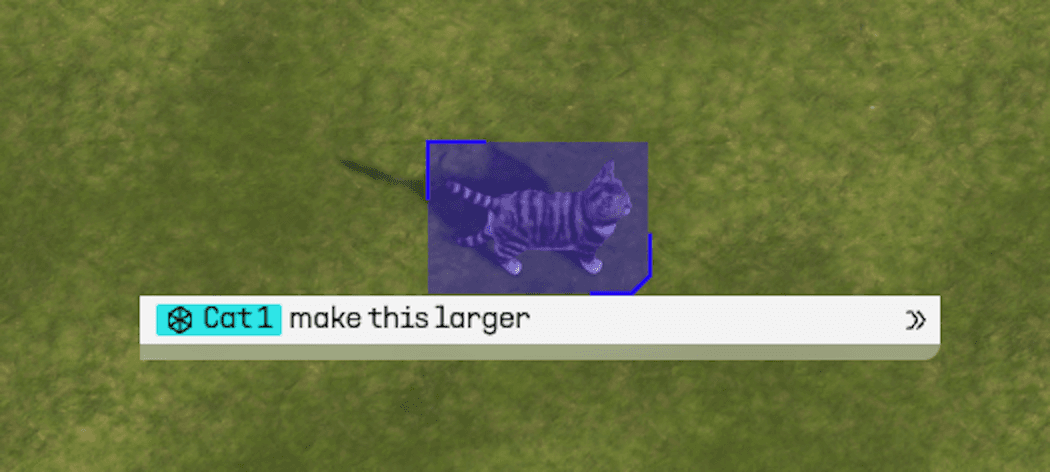

We also quickly discovered that we wanted to be able to click on things to “talk” about them because, without that, it’s quite hard to describe what you’re talking about. “That tree in the middle of the forest, next to the big rock, and two meters down from the lake” gets tedious quickly compared to just clicking the tree and saying “change this tree.” We’re still trying to find the right balance between prompting and traditional controls, and are actively experimenting in both directions.
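Concretely, a clicked selection can travel alongside the typed prompt so the model can resolve words like “this tree.” Below is a minimal TypeScript sketch under that assumption; `PromptContext`, `renderPrompt`, and the text layout are hypothetical, not Braindump’s actual format.

```typescript
interface Vec2 { x: number; y: number; }

// Hypothetical payload sent along with a typed prompt.
interface SelectedObject {
  id: string;
  kind: string; // e.g. "tree"
  position: Vec2;
}

interface PromptContext {
  text: string;                // what the user typed, e.g. "change this tree"
  selection: SelectedObject[]; // objects the user clicked on
  cursor?: Vec2;               // where in the world the prompt was started
}

// Render the context as plain text for the LLM, so that "this" or "here"
// can be tied to concrete ids and coordinates.
function renderPrompt(ctx: PromptContext): string {
  const lines: string[] = [];
  if (ctx.selection.length > 0) {
    lines.push("Selected objects:");
    for (const obj of ctx.selection) {
      lines.push(`- ${obj.kind} (id=${obj.id}) at (${obj.position.x}, ${obj.position.y})`);
    }
  }
  if (ctx.cursor) {
    lines.push(`Prompt location: (${ctx.cursor.x}, ${ctx.cursor.y})`);
  }
  lines.push(`User request: ${ctx.text}`);
  return lines.join("\n");
}

// Clicking a tree and typing "change this tree":
const example = renderPrompt({
  text: "change this tree",
  selection: [{ id: "tree-42", kind: "tree", position: { x: 12, y: 7 } }],
});
```

Compared with describing the tree’s surroundings in words, the selection hands the model an unambiguous id to act on.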

Challenge 2: Designing a Game API for LLMs

Alright, we’ve covered how to interact with the LLM from a UX perspective. Let’s talk about the code it writes for us now.

Initially, we tried to get GPT to generate code for an existing game engine (we tried Three.js, a couple of JavaScript game engines, and working with the DOM directly), but we realized that, even though it’s quite good at generating code snippets, it struggles with bigger pieces of software and building and maintaining software architecture.

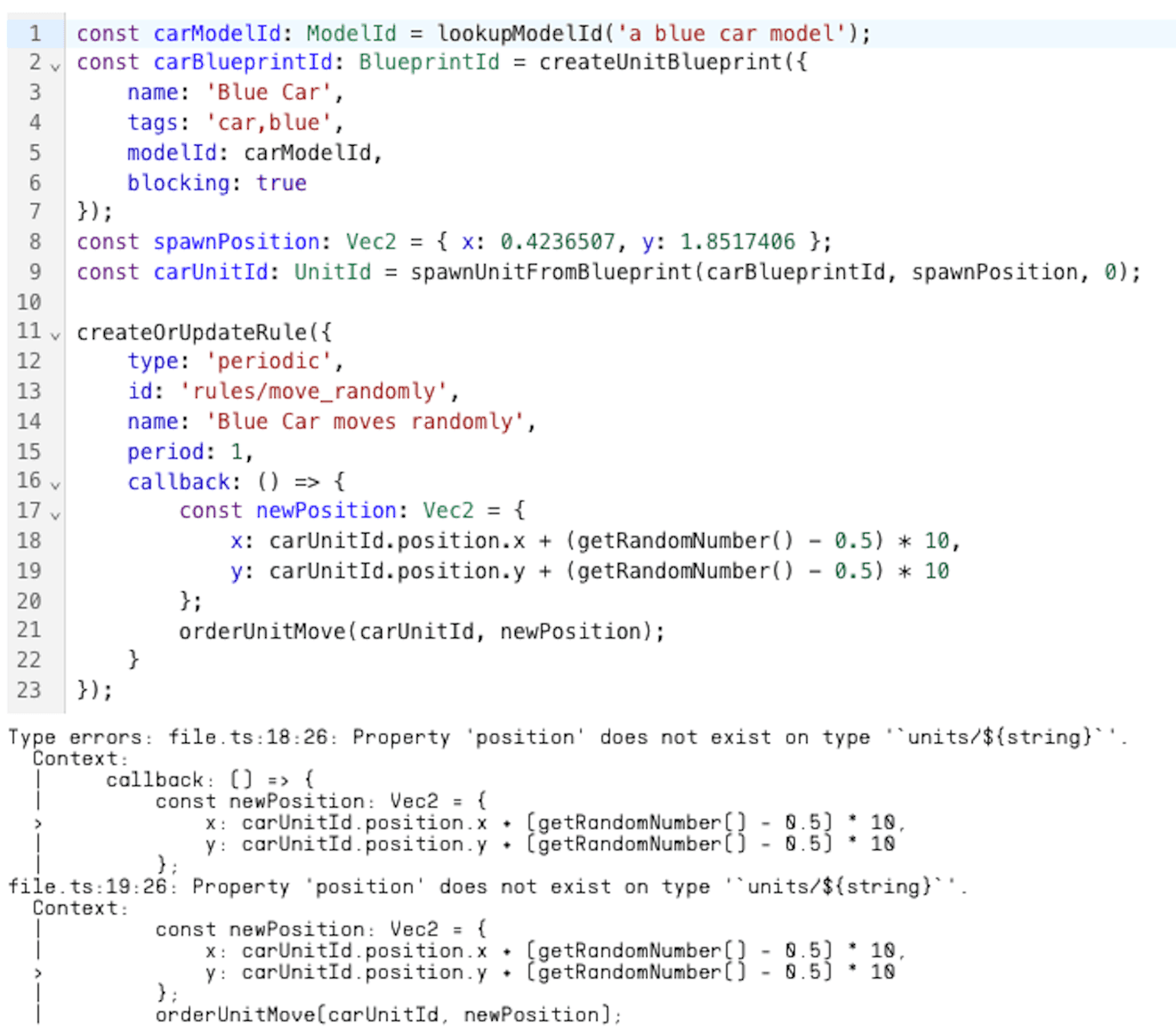

Instead, we built a much more streamlined “game API” in TypeScript, which provides as much structure as possible, so that the LLM can focus on filling in code and data.

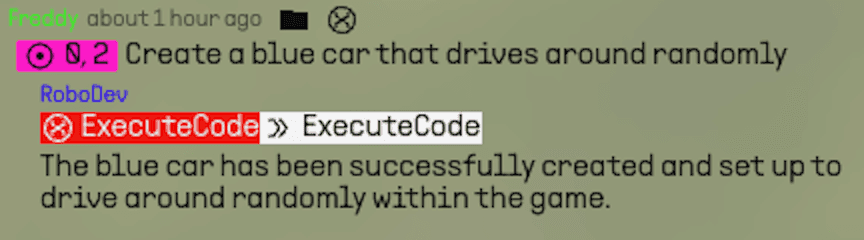

As an example, here’s the code generated for the prompt “Create a blue car that drives around randomly.” We don’t permit it to write classes, functions, or any other “code structure.” Instead, we force it to create “blueprints” and output “rules,” which follow a strict structure. These are also shown to the user in the UI, so you know what it’s done:
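As a rough illustration of the blueprint-and-rule idea, here is a hypothetical TypeScript sketch. Every name in it (`Blueprint`, `Rule`, `onTick`, `tick`) is invented for this example and is not Braindump’s real API; the point is that the model only fills in data and a rule body, while the runtime owns the structure.

```typescript
interface Vec2 { x: number; y: number; }

interface Entity {
  id: number;
  blueprint: string;
  position: Vec2;
  heading: number; // radians
}

// A blueprint is pure data: what a kind of unit looks like and its stats.
interface Blueprint {
  name: string;
  model: string; // description handed to the 3D-model generator
  color: string;
  speed: number; // world units per second
}

// A rule is a trigger plus an action. The LLM fills in the action body
// but does not get to declare classes or top-level functions of its own.
interface Rule {
  name: string;
  trigger: "onTick";
  appliesTo: string; // blueprint name
  action: (e: Entity, dt: number, random: () => number) => void;
}

// What the LLM might emit for "Create a blue car that drives around randomly".
const blueCar: Blueprint = { name: "BlueCar", model: "small car", color: "blue", speed: 4 };

const wander: Rule = {
  name: "BlueCar wanders randomly",
  trigger: "onTick",
  appliesTo: "BlueCar",
  action: (car, dt, random) => {
    // About 5% of ticks: turn by up to 90 degrees either way.
    if (random() < 0.05) car.heading += (random() - 0.5) * Math.PI;
    // Then move forward along the current heading.
    car.position.x += Math.cos(car.heading) * blueCar.speed * dt;
    car.position.y += Math.sin(car.heading) * blueCar.speed * dt;
  },
};

// Minimal runtime step: apply every matching rule to every entity.
function tick(entities: Entity[], rules: Rule[], dt: number, random: () => number): void {
  for (const rule of rules) {
    for (const e of entities) {
      if (e.blueprint === rule.appliesTo) rule.action(e, dt, random);
    }
  }
}
```

Injecting `random` keeps a simulation step reproducible, which also makes generated rules easier to test.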

From our game API, we generate TypeScript type definitions (.d.ts files), which are fed to GPT in the system prompt. GPT seems to take the hint: it consistently uses our API and mostly uses it correctly on the first try.
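A minimal sketch of that step, assuming the declarations are simply pasted into the system prompt as text. The declaration contents, the `<game-api>` delimiters, and `buildSystemPrompt` are all hypothetical.

```typescript
// Hypothetical excerpt of the declarations emitted from the game API.
// The real API surface is larger, and these names are invented.
const gameApiDts = [
  "declare interface Vec2 { x: number; y: number }",
  "declare function spawn(blueprint: string, position: Vec2): number;",
  "declare function findAll(blueprint: string): number[];",
].join("\n");

// Wrap the declarations in instructions that restrict the model to this API.
function buildSystemPrompt(dts: string): string {
  return [
    "You write TypeScript that runs against the game API declared below.",
    "Use only these declarations. Do not declare classes or functions.",
    "<game-api>",
    dts,
    "</game-api>",
  ].join("\n");
}

const systemPrompt = buildSystemPrompt(gameApiDts);
```

Because the same .d.ts files can also drive the type checker, the model and the compiler see one shared definition of the API.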

Type checking has also given us a surprise benefit: GPT will actually try to self-correct when it sees that it has made a type error. All we had to do was feed it the errors; as soon as we did, it started doing this on its own. For the car prompt above, the LLM made an error in its first attempt and then corrected it in its second attempt:
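The feedback loop can be sketched as follows. This is an assumption about its shape, not Braindump’s code: the LLM call (`Generate`) and the type checker (`TypeCheck`) are injected as hypothetical function types, so no particular provider or compiler API is implied.

```typescript
interface Message { role: "system" | "user" | "assistant"; content: string; }

// Hypothetical injected dependencies.
type Generate = (messages: Message[]) => Promise<string>;
type TypeCheck = (code: string) => string[]; // diagnostics; empty means OK

async function generateWithRepair(
  systemPrompt: string,
  userPrompt: string,
  generate: Generate,
  typeCheck: TypeCheck,
  maxAttempts = 3,
): Promise<{ code: string; attempts: number; errors: string[] }> {
  const messages: Message[] = [
    { role: "system", content: systemPrompt },
    { role: "user", content: userPrompt },
  ];
  let code = "";
  let errors: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    code = await generate(messages);
    errors = typeCheck(code);
    if (errors.length === 0) return { code, attempts: attempt, errors };
    // Show the model its own code plus the compiler output, then retry.
    messages.push({ role: "assistant", content: code });
    messages.push({
      role: "user",
      content: `Your code has type errors:\n${errors.join("\n")}\nPlease fix them.`,
    });
  }
  return { code, attempts: maxAttempts, errors };
}
```

Capping the attempts keeps a model that never converges from looping forever; the last errors are returned so they can be surfaced to the user.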

Generated Macros

The game API has also opened up another interesting UX flow: generated macros.

Normally, a macro in an application is a small program that lets you automate some task. For example, converting every second row in a spreadsheet from one currency to another.

In our system, however, all prompts generate code, and that code can automate pretty much anything covered by the game API.

As an example, I can type prompts such as “Place a tent next to each campfire,” and GPT will happily do so:
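Here is a hypothetical sketch of the kind of code such a macro prompt could produce. The `World` class, its `spawn`/`findAll` methods, and the 2-unit offset are invented for illustration. In practice the generated code would be a few plain statements; it is wrapped in a function here only so it can be called.

```typescript
interface Vec2 { x: number; y: number; }
interface GameObject { id: number; blueprint: string; position: Vec2; rotation: number; }

// Stand-in for the runtime's world API (hypothetical).
class World {
  private nextId = 1;
  readonly objects: GameObject[] = [];

  spawn(blueprint: string, position: Vec2, rotation = 0): GameObject {
    const obj = { id: this.nextId++, blueprint, position: { ...position }, rotation };
    this.objects.push(obj);
    return obj;
  }

  // Returns a new array, so spawning while iterating over it is safe.
  findAll(blueprint: string): GameObject[] {
    return this.objects.filter((o) => o.blueprint === blueprint);
  }
}

// "Place a tent next to each campfire"
function placeTentsNextToCampfires(world: World, offset = 2): GameObject[] {
  return world.findAll("Campfire").map((fire) =>
    world.spawn("Tent", { x: fire.position.x + offset, y: fire.position.y }),
  );
}
```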

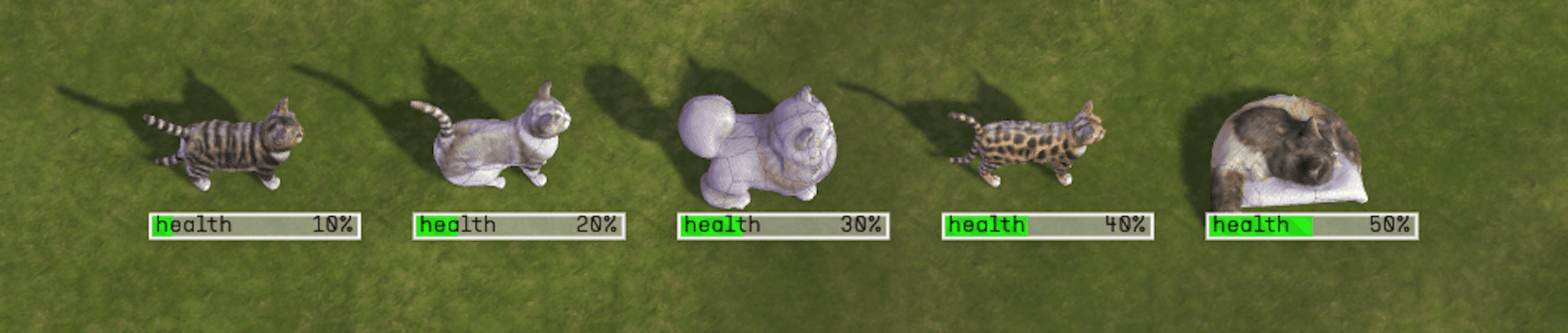

Or I can have it automate tedious tasks, such as “Create five different cats with different stats.”

I can even ask it questions that require computation to answer, such as “How many tents are there that are facing north?” GPT will generate some code that counts the tents based on their rotation:
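A sketch of what that counting code might look like, under stated assumptions: rotation is a yaw in degrees, 0 means north and values grow clockwise, and “facing north” means within 45° of north. The convention, the tolerance, and the `Tent` shape are all hypothetical.

```typescript
interface Tent { id: number; rotation: number; } // degrees, 0 = north (assumed)

// Angle between a rotation and north, in [0, 180].
function angularDistanceFromNorth(rotationDeg: number): number {
  // Normalize to [0, 360), then take the shorter way around the circle.
  const r = ((rotationDeg % 360) + 360) % 360;
  return Math.min(r, 360 - r);
}

function countTentsFacingNorth(tents: Tent[], toleranceDeg = 45): number {
  return tents.filter((t) => angularDistanceFromNorth(t.rotation) < toleranceDeg).length;
}
```

The normalization step matters because generated or hand-placed objects can end up with rotations like -20 or 725 that are still effectively pointing north.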

This opens up a whole new way of working. It’s a bit strange at first, as we’re not really used to being able to do these things with programs. Once you get used to it, however, you can find creative ways to complete what would otherwise be very tedious tasks in a matter of seconds.

Collaborative Editing with AI

We wanted everything in Braindump to be multiplayer: both the creation of games and the playing of games. We’ve supported multiplayer editing from the very first version. At the beginning, we just had one big chat, which everyone could contribute to. This proved chaotic, though, even with just two people. The main problem is that you’re often working on two different things, which may not be relevant to each other. This confused both users and GPT!

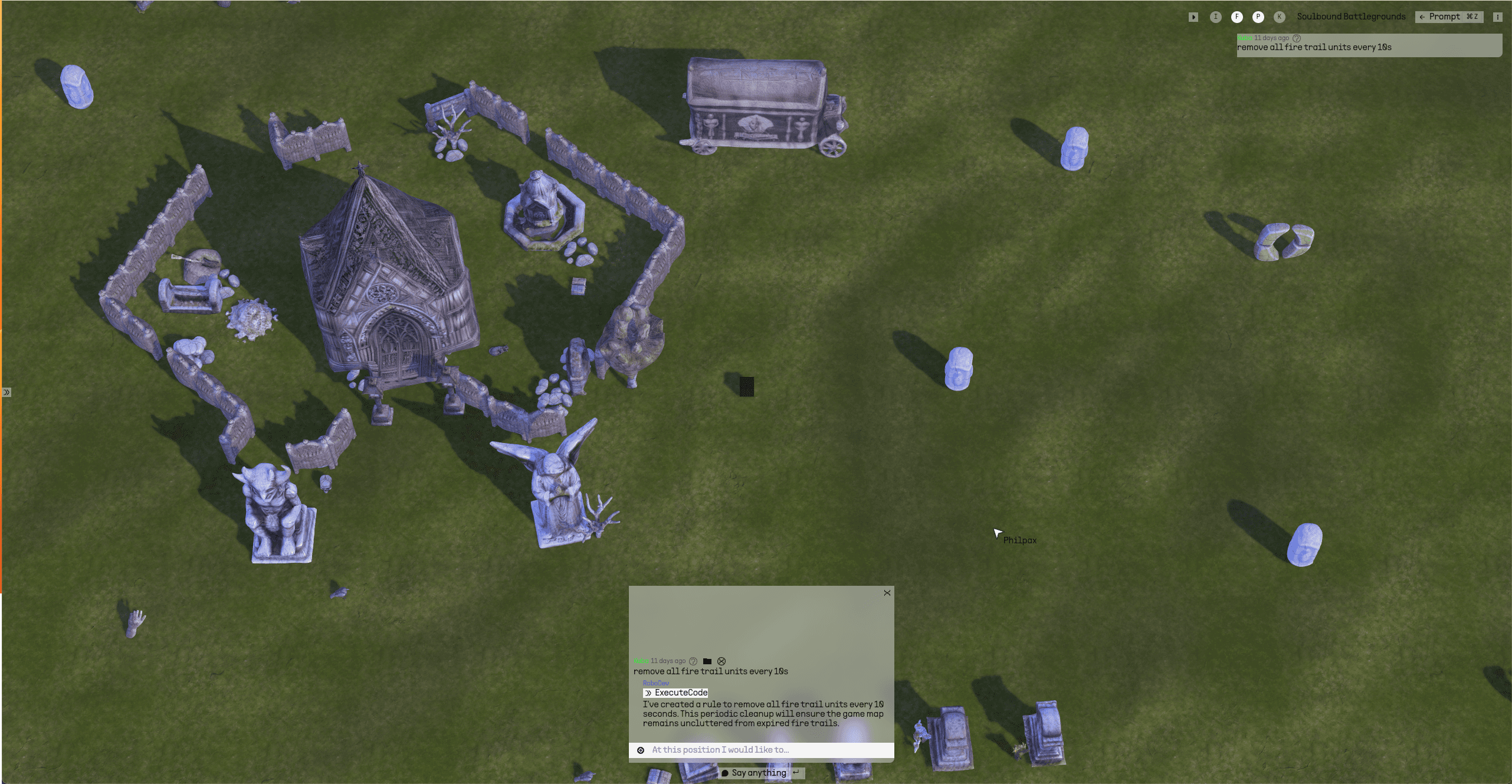

After trying several solutions, we converged on something we call “threads.” This lets you initiate a prompt from anywhere in the world, like this:

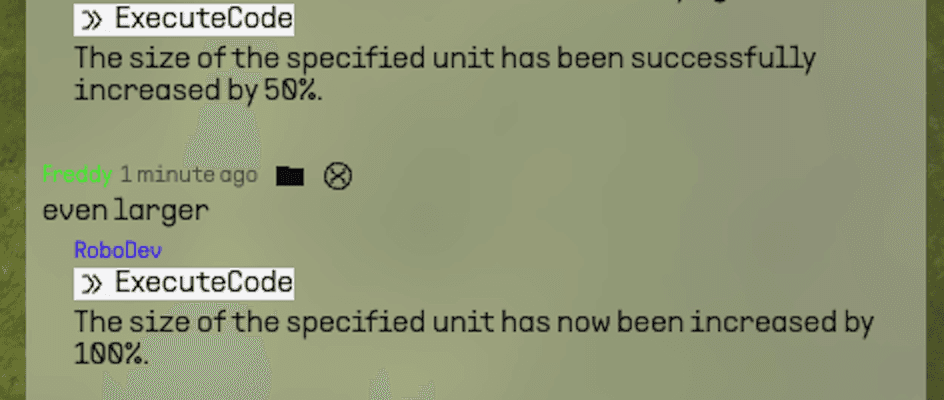

But you can also refine or add to that prompt when needed, like this:

The thread is given the latest game state to begin with, but it doesn’t have the full history of the project. Multiple threads can be “running” at the same time, but only one prompt can be running per thread at a time.
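The two thread rules above (start from a snapshot of the latest state; at most one running prompt per thread, while different threads run concurrently) could be enforced roughly like this. `PromptThread`, `startThread`, and the JSON-based snapshot are assumptions for illustration only.

```typescript
type GameState = Record<string, unknown>;

class PromptThread {
  private running: Promise<void> | null = null;
  readonly history: string[] = []; // this thread's prompts only

  constructor(readonly id: string, readonly initialState: GameState) {}

  get isBusy(): boolean {
    return this.running !== null;
  }

  // Refuses a new prompt while one is still in flight on this thread.
  // Errors from `run` are swallowed here to keep the sketch short.
  submit(prompt: string, run: (prompt: string, history: string[]) => Promise<void>): boolean {
    if (this.running) return false;
    this.history.push(prompt);
    const clear = () => { this.running = null; };
    this.running = run(prompt, this.history).then(clear, clear);
    return true;
  }
}

function startThread(id: string, latestState: GameState): PromptThread {
  // Each thread gets its own copy of the world as it is right now,
  // not the project's full prompt history.
  return new PromptThread(id, JSON.parse(JSON.stringify(latestState)));
}
```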

So far, this has worked pretty well. In our testing, we’ve had five people working in the same world simultaneously, which was definitely a bit chaotic but still functional. We’re actively exploring ways to help users coordinate effectively.

Benchmarking and Testing

To evaluate the performance of our prompt engine, we’ve developed a benchmarking tool. The tool runs dozens of scenarios we’ve specified, each with its own prompts, and then uses GPT to evaluate whether those prompts succeeded.

Here’s an example of a benchmark:

As you can see, we simply specify the “expected” conditions in natural language. A second GPT instance, “the evaluator” (with its own system prompt), is given these conditions, the state of the simulation at completion, and any errors encountered, and is asked to judge whether the test succeeded, including a critique of what went wrong.
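A minimal sketch of such a harness, assuming the prompt engine and the evaluator model are injected as functions (`RunPrompts` and `Evaluate` are hypothetical types, not Braindump’s code). Each scenario pairs prompts with natural-language expectations, and the aggregate success rate is what a number like the one below would come from.

```typescript
interface Scenario {
  name: string;
  prompts: string[];
  expected: string[]; // e.g. "There is exactly one blue car"
}

interface Verdict { success: boolean; critique: string; }

// Hypothetical injected dependencies.
type RunPrompts = (prompts: string[]) => Promise<{ finalState: unknown; errors: string[] }>;
type Evaluate = (expected: string[], finalState: unknown, errors: string[]) => Promise<Verdict>;

async function runBenchmark(scenarios: Scenario[], runPrompts: RunPrompts, evaluate: Evaluate) {
  const results: { name: string; verdict: Verdict }[] = [];
  for (const s of scenarios) {
    // Play the scenario's prompts, then hand the outcome to the evaluator.
    const { finalState, errors } = await runPrompts(s.prompts);
    results.push({ name: s.name, verdict: await evaluate(s.expected, finalState, errors) });
  }
  const passed = results.filter((r) => r.verdict.success).length;
  return { results, successRate: scenarios.length === 0 ? 0 : passed / scenarios.length };
}
```

Keeping the critique alongside each verdict makes it easier to see why a scenario regressed, not just that it did.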

Our test suite is still in its early days, but we’re constantly adding tests as we discover new prompting styles and failure cases.

(Just as we were finishing up this blog post, GPT-4o was released, and our test suite’s success rate went from 80% to 91%.)

Why We’re Building Braindump

Personally, I’ve always loved games and creativity. I learned to code so that I could create games. To me, generative AI is naturally the next step in productivity enhancement; with it, you can simply do more. As big studios are getting more and more conservative with the games they build, I’m excited to empower small groups or even individuals to build their dream games. I want to see what crazy ideas people come up with and realize when they have an entire AI game studio at their fingertips.

Next Up

Braindump is just getting started. Although it’s pretty good at executing “commands” right now (“Create a cat”), we know that we can expand that to handle much more vague or “big” tasks as well. Some of the things we’re looking into are:

Supporting “bigger” prompts through planning

Getting GPT to stop guessing and instead ask the user for clarification

Improving code quality by making GPT critique its own work

Improving discoverability and inspiration (“what can I build with this?”)

Improving game engine capabilities in an LLM-amenable way

Wrapping up

If you’ve stuck with me all the way down here, then thank you for reading this, and I hope it was interesting!

If you would like to try Braindump yourself, make sure to sign up for alpha testing. We’ll be letting in the first few people over the coming weeks. You can also follow us on Discord, Twitter, TikTok, and YouTube, where we’ll be posting updates.

Hope you’ve enjoyed it, and until next time!

/Fredrik Norén, CPTO Braindump

About Us

We’re a small company based in Stockholm, Sweden. There are currently six of us, with many years of experience at places ranging from Spotify and Snapchat to AAA game studios. You can find us on LinkedIn.